Language Is a Strange Loop

Ok, so humans used to remember phone numbers, a lot of them, but today most of us remember very few, because we have outsourced memory to our devices.

Are we worse off as a species because of that?

Probably not. Memorizing multiple seven-digit sequences is indeed impressive, but I don’t really miss it.

But what about writing?

Today people outsource their ability to write, and although it bums me out, because I put in my ten thousand hours to get good at it, I don’t suspect writing will be missed much by the next generation. My 17-year-old daughter is about to go to college, and she has a wide choice of options, because she went to an early college high school and will graduate with an associate degree. She won’t miss writing when it’s entirely machine-generated. She hates it, and I suspect she is quite good at prompting ChatGPT.

Students are using AI to generate essays that they used to write themselves, and who can blame them? How is an analysis of a Shakespearean theme or an essay on capital punishment going to be relevant for them when they get out into a job market that will require very little writing? And if there is a need to write, they’ll use LLMs. Recently, a popular business magazine let it leak that its journalists are encouraged to use large language models to produce stories, which makes sense for a digital magazine that only wants clicks, attention. If you can put out 100 articles a week by a single journalist, rather than one or two, what would stop you?

Why do professors even make students write traditional essays? The students must think it pointless, and for them, they are probably right. Administrators use LLMs to write emails and reports, and if you ask them about it, they feel absolutely no remorse for using them. And why should they? Why spend so much time composing an email when you can prompt a system to draft it? After reading it over and making a few changes, why wouldn’t you send it?

AI-generated text is also used by professors. There was a recent story in the news (https://www.newsweek.com/college-ai-students-professor-chatgpt-2073192) in which a professor was “caught” responding to student work using LLM-generated text. Can you blame him? Imagine the archetypal freeway flyer, the person who teaches freshman composition at multiple college campuses in multiple districts and has to jump on the freeway to make it to their next class. They teach five or more composition classes per semester for very little pay. If they could use technology to make their lives easier and give them more time to do what they love, like writing and reading, do you think every single one of them is going to say no?

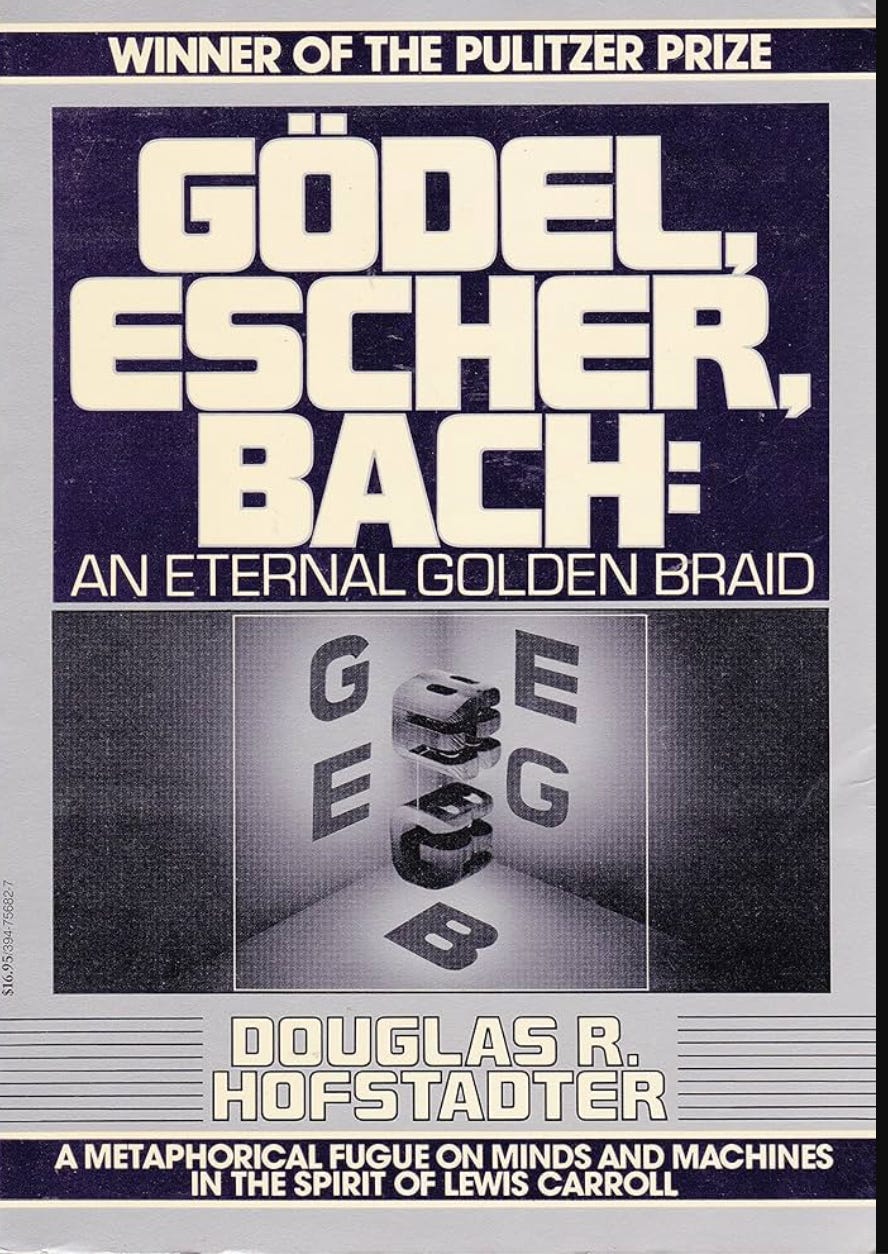

Within a few years, it will be culturally acceptable to use AI to grade student AI-generated essays, which is a strange loop unpredicted by Douglas Hofstadter. AI grading AI. AI chasing a tail that ends up being the tail of AI.

I have a friend who worked for an AI startup that used machines to analyze the writing of potential employees to reveal their psychological profile. The problem is that once LLMs became available, those same applicants started using them to write their cover letters, so it’s AI examining itself in a strange loop, analyzing its own personality (or lack thereof).

Much of the new content we see online (book reviews, articles, comments on articles) is AI-generated, even if it’s carefully prompted by an individual whose name is on the article. In other words, people who write articles are using AI to write them, and AI scrapes those articles off the internet and emulates them, so AI writing becomes sets of linguistic loops with no challenge to syntax and form, which by extension means no challenge to the political status quo.